LATIN HYPERCUBE VS MONTE CARLO FULL

Latin hypercube sampling (LHS) is a robust, scalable Monte Carlo method that is used in many areas of science and engineering. We present a new algorithm for generating hierarchic Latin hypercube sets (HLHS) that are recursively divisible into LHS subsets. Based on this new construction, we introduce a hierarchical incremental LHS (HILHS) method that allows the user to employ LHS in a flexibly incremental setting. This overcomes a drawback of many LHS schemes that require the entire sample set to be selected a priori, or only allow very large increments. We derive the sampling properties for HLHS designs and HILHS estimators. We also present numerical studies that showcase the flexible incrementation offered by HILHS.

Journal of the American Statistical Association, 2017, vol.

When you perform multiple simulations, their means are much closer together with Latin Hypercube than with Monte Carlo; this is how the Latin Hypercube method makes simulations converge faster than Monte Carlo.

The attached Excel workbook lets you explore the difference in the distribution of sample means between the Monte Carlo and Latin Hypercube sampling methods. Select your sample size and number of simulations and click “Run Comparison”. If you wish, you can change the mean and standard deviation of the input distribution, or even select a completely different distribution to explore. Under every combination we’ve tested, the sample means are much closer together with the Latin Hypercube sampling method than with the Monte Carlo method.
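If you don't have the workbook to hand, the same kind of comparison can be roughed out in a few lines of Python. This is only a sketch under stated assumptions, not the workbook's actual logic: the function names (`monte_carlo_sample`, `latin_hypercube_sample`, `run_comparison`) are made up for illustration, the input is assumed to be a normal distribution, and the Latin Hypercube step simply takes one random value from each equal-probability interval of the cumulative curve.

```python
import random
from statistics import NormalDist, fmean, stdev


def monte_carlo_sample(dist, n, rng):
    """Plain Monte Carlo: n independent draws from the input distribution."""
    return [rng.gauss(dist.mean, dist.stdev) for _ in range(n)]


def latin_hypercube_sample(dist, n, rng):
    """One random draw from each of n equal-probability intervals of the CDF."""
    eps = 1e-12  # keep probabilities strictly inside (0, 1) for inv_cdf
    values = []
    for i in range(n):
        p = (i + rng.random()) / n          # random point inside interval i
        p = min(max(p, eps), 1.0 - eps)
        values.append(dist.inv_cdf(p))      # map probability back to a value
    rng.shuffle(values)                     # iteration order is not meaningful
    return values


def run_comparison(dist, sample_size, simulations, seed=0):
    """Return the spread (std. dev.) of sample means for each method."""
    rng = random.Random(seed)
    mc_means = [fmean(monte_carlo_sample(dist, sample_size, rng))
                for _ in range(simulations)]
    lhs_means = [fmean(latin_hypercube_sample(dist, sample_size, rng))
                 for _ in range(simulations)]
    return stdev(mc_means), stdev(lhs_means)


# Hypothetical settings: a normal input with mean 655 and standard deviation 20
mc_spread, lhs_spread = run_comparison(NormalDist(655, 20),
                                       sample_size=100, simulations=200)
print(f"Spread of Monte Carlo means:     {mc_spread:.3f}")
print(f"Spread of Latin Hypercube means: {lhs_spread:.3f}")
```

Changing the arguments to `NormalDist` or the `sample_size` and `simulations` values plays the same role as editing the inputs in the workbook before running the comparison.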

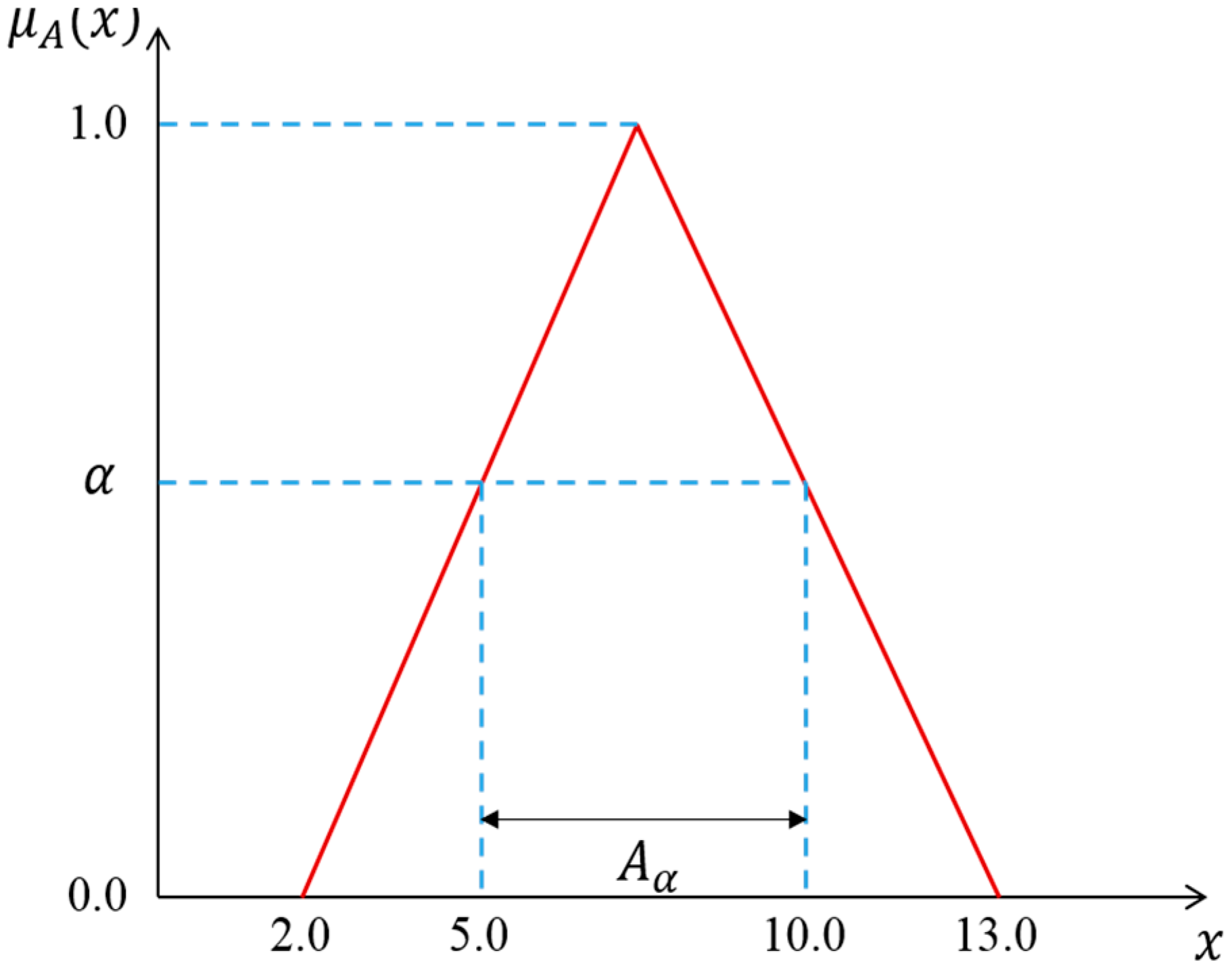

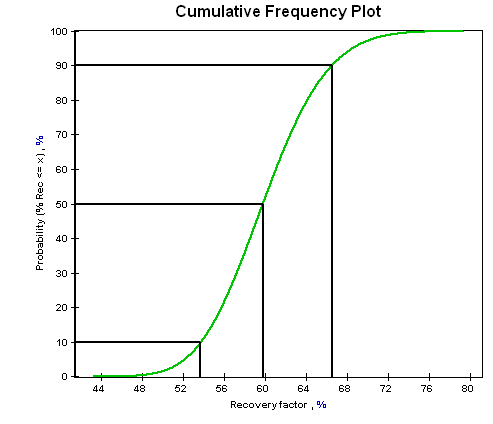

Monte Carlo sampling refers to the traditional technique for using random or pseudo-random numbers to sample from a probability distribution. Monte Carlo sampling techniques are entirely random in principle - that is, any given sample value may fall anywhere within the range of the input distribution. With enough iterations, Monte Carlo sampling recreates the input distributions through sampling. A problem of clustering, however, arises when a small number of iterations are performed.

Each simulation in @RISK or RISKOptimizer represents a random sample from each input distribution. The question naturally arises, how much separation between the sample mean and the distribution mean do we expect? Or, to look at it another way, how likely are we to get a sample mean that’s a given distance away from the distribution mean?

The Central Limit Theorem of statistics (CLT) answers this question with the concept of the standard error of the mean (SEM). One SEM is the standard deviation of the input distribution, divided by the square root of the number of iterations per simulation. For example, with RiskNormal(655,20) the standard deviation is 20. If you have 100 iterations, the standard error is 20/√100 = 2. The CLT tells us that about 68% of sample means should occur within one standard error above or below the distribution mean, and 95% should occur within two standard errors above or below. In practice, sampling with the Monte Carlo sampling method follows this pattern quite closely.

By contrast, Latin Hypercube sampling stratifies the input probability distributions. With this sampling type, @RISK or RISKOptimizer divides the cumulative curve into equal intervals on the cumulative probability scale, then takes a random value from each interval of the input distribution. We no longer have pure random samples and the CLT no longer applies. Instead, we have stratified random samples. The effect is that each sample (the data of each simulation) is constrained to match the input distribution very closely. Therefore, for even modest sample sizes, the Latin Hypercube method makes all or nearly all of the sample means fall within a small fraction of the standard error. This is usually desirable, particularly in @RISK when you are performing just one simulation.

A separately reported study of statistical circuit analysis compares quasi-Monte Carlo (QMC) rigorously with both Monte Carlo and Latin hypercube sampling across a set of digital and analog circuits, in 90 and 45 nm technologies, varying in size from 30 to 400 devices. It reports consistently superior performance from QMC, giving a 2× to 8× speedup over conventional Monte Carlo for roughly 1% accuracy levels.
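The standard-error reasoning above can be checked numerically. The sketch below is an illustration only, using Python's standard library rather than @RISK itself: it assumes a normal input with mean 655 and standard deviation 20 (the RiskNormal(655,20) example), 100 iterations per simulation, and 2000 simulations. The helper names and the quarter-of-one-SEM threshold used for the stratified samples are arbitrary choices made for this example.

```python
import math
import random
from statistics import NormalDist, fmean

MEAN, SD, ITERATIONS = 655.0, 20.0, 100
INPUT_DIST = NormalDist(MEAN, SD)
SEM = SD / math.sqrt(ITERATIONS)   # 20 / sqrt(100) = 2


def pure_random_mean(rng):
    """Sample mean of one simulation with pure random (Monte Carlo) sampling."""
    return fmean(rng.gauss(MEAN, SD) for _ in range(ITERATIONS))


def stratified_mean(rng):
    """Sample mean of one simulation with one draw per cumulative interval."""
    eps = 1e-12  # guard so inv_cdf never receives exactly 0 or 1
    total = 0.0
    for i in range(ITERATIONS):
        p = (i + rng.random()) / ITERATIONS   # random point in interval i
        total += INPUT_DIST.inv_cdf(min(max(p, eps), 1.0 - eps))
    return total / ITERATIONS


def fraction_within(means, distance):
    """Share of sample means no further than `distance` from the true mean."""
    return sum(abs(m - MEAN) <= distance for m in means) / len(means)


rng = random.Random(2024)
mc_means = [pure_random_mean(rng) for _ in range(2000)]
lhs_means = [stratified_mean(rng) for _ in range(2000)]

mc_within_1 = fraction_within(mc_means, 1 * SEM)
mc_within_2 = fraction_within(mc_means, 2 * SEM)
lhs_within_quarter = fraction_within(lhs_means, 0.25 * SEM)

print(f"SEM = {SEM}")
print(f"Monte Carlo means within 1 SEM: {mc_within_1:.1%}")
print(f"Monte Carlo means within 2 SEM: {mc_within_2:.1%}")
print(f"Stratified means within 0.25 SEM: {lhs_within_quarter:.1%}")
```

The Monte Carlo fractions should land near the 68% and 95% figures given by the CLT, while nearly all of the stratified sample means should fall inside the much tighter quarter-SEM band.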

LATIN HYPERCUBE VS MONTE CARLO MANUALS

The @RISK and RISKOptimizer manuals state, “We recommend using Latin Hypercube, the default sampling type setting, unless your modeling situation specifically calls for Monte Carlo sampling.” But what’s the actual difference?
